Showcasing the Enhancement of User Experience with Conversational AI - Part 2

Part 2 is the implementation story: deploying the AI assistant on AWS, keeping secrets safe, and ensuring that only trusted devices can access it.

Situation

In the first part of the article, we discussed the importance of selecting the right model, considering factors like response time and quality. We also highlighted the significance of including conversation history in API calls to maintain coherent and relevant responses. Furthermore, we explored how fine-tuning GPT-3 models can improve performance by incorporating specific knowledge and details. In the second part, we will focus on the practical steps of integrating a customer support assistant function into an app while minimizing costs.

Let's dive into the heart of the matter.

Picture this: on one side, customers are engrossed in exploring our apps on their smartphones. On the other, our state-of-the-art GPT models are primed and ready to assist. The challenge is to connect the two seamlessly. How do we bridge this gap?

The first step is to build an application that enables communication between mobile app users and the GPT models. This application acts as an intermediary, relaying information and requests between the two parties.

The application is designed to receive user requests or queries from the mobile app. These requests can range from simple inquiries to more complex interactions that require assistance from the GPT models.

Once the user request is received, the application interacts with the GPT models by making API calls. This involves sending the user query and any relevant context or conversation history to the GPT models for processing and generating a response.

After making the API call, the application processes the response received from the GPT models. This may involve extracting and formatting the relevant information or response generated by the GPT models.

Finally, the application sends the generated response back to the mobile app, ensuring timely delivery of the assistance provided by the GPT models. The user can then receive the personalized and accurate support they need within the app.
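The request and response loop above starts by assembling what gets sent to the model on each call. The helper below is a minimal sketch of that step. It assumes OpenAI's chat message format (a list of `role`/`content` dictionaries) and a conversation history the app sends along with each question. `build_messages`, `SYSTEM_PROMPT`, and the ten-message cap are hypothetical names and values, not the author's code.

```python
from typing import Dict, List

# Hypothetical system prompt; the article does not show the real one.
SYSTEM_PROMPT = "You are a helpful customer support assistant for our app."

# Assumption: keep only the most recent messages to bound token usage and cost.
MAX_HISTORY_MESSAGES = 10


def build_messages(history: List[Dict[str, str]], user_query: str,
                   max_history: int = MAX_HISTORY_MESSAGES) -> List[Dict[str, str]]:
    """Assemble system prompt + recent conversation history + the new user query."""
    # Guard against max_history == 0, because history[-0:] would return the whole list.
    recent = history[-max_history:] if max_history > 0 else []
    return (
        [{"role": "system", "content": SYSTEM_PROMPT}]
        + recent
        + [{"role": "user", "content": user_query}]
    )


# Example: a follow-up question that only makes sense with the earlier turns included.
history = [
    {"role": "user", "content": "How do I reset my password?"},
    {"role": "assistant", "content": "Open Settings and tap 'Reset password'."},
]
messages = build_messages(history, "I did that but got no email.")
```

Trimming the history is a cost trade-off. Every message sent is billed as input tokens, so a shorter window is cheaper, but the model loses older context.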

Serverless solution

Performing this kind of task, whether simple or complex, requires appropriate computing resources. When it comes to selecting a provider for these resources, the field is highly competitive, with several worthy contenders. Giants like Google Cloud Platform (GCP), Microsoft Azure, and Amazon Web Services (AWS) offer services that are suitable for different requirements and workloads. For the purposes of our exploration, however, I'll focus primarily on AWS, although the concepts can be transferred to analogous services on other platforms.

In the realm of cloud computing, AWS presents two main solutions: EC2, a virtual server-based service, and Lambda, a serverless computing service. It's important to mention here that both GCP and Azure have their equivalents, too, with GCP offering Compute Engine and Cloud Functions, while Azure provides Azure Virtual Machines and Azure Functions. I won't delve into the specifics of these services, but the comparison of AWS's EC2 and Lambda in the following paragraphs should give a good grounding in the general principles.

Choosing between AWS Lambda and EC2 is like choosing between conveyor belt sushi (回転寿司, kaiten-zushi) and an all-you-can-eat buffet. With Lambda, you opt for a serverless architecture and pay only for the compute time you consume, plate by plate. There is no charge while your code isn't running, which makes Lambda cost-effective for infrequent workloads or services with variable demand. EC2, by contrast, is billed more like traditional web hosting. You pay for the computing capacity you provision, whether or not you use it fully, so the bill stays steady regardless of actual usage. That makes EC2 better suited to predictable workloads with steady demand.

While both Lambda and EC2 have their respective use cases and benefits, considering the nature of my app, where users could pose questions at any given moment but wouldn't require a server running 24/7, Lambda is the most cost-efficient choice.
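The buffet-versus-sushi trade-off reduces to simple arithmetic. Lambda charges per request plus per GB-second of compute. An EC2 instance charges for every hour it runs. The sketch below compares the two. All rates are placeholder parameters for illustration, not quoted AWS prices, and the free tier is ignored. Note that Lambda bills the full wall-clock duration, including time spent waiting for OpenAI's response.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8760 / 12)


def lambda_monthly_cost(requests: int, avg_duration_s: float, memory_mb: int,
                        price_per_million_requests: float,
                        price_per_gb_second: float) -> float:
    """Pay-per-use: a per-request charge plus a compute charge in GB-seconds."""
    gb_seconds = requests * avg_duration_s * (memory_mb / 1024)
    request_charge = requests / 1_000_000 * price_per_million_requests
    return request_charge + gb_seconds * price_per_gb_second


def ec2_monthly_cost(hourly_rate: float, instances: int = 1) -> float:
    """Provisioned capacity: billed for every hour the instance runs, busy or idle."""
    return hourly_rate * HOURS_PER_MONTH * instances


# Placeholder rates for illustration only; substitute current AWS prices for your region.
lambda_cost = lambda_monthly_cost(requests=30_000, avg_duration_s=2.0, memory_mb=128,
                                  price_per_million_requests=0.20,
                                  price_per_gb_second=0.0000166667)
ec2_cost = ec2_monthly_cost(hourly_rate=0.0104)
print(f"Lambda: ${lambda_cost:.2f}/month, EC2: ${ec2_cost:.2f}/month")
```

With sporadic traffic, the Lambda figure scales with usage and drops toward zero. The EC2 figure stays fixed no matter how few questions users ask.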

Build Lambda Function

AWS Lambda natively supports several programming languages, including Java, Go, PowerShell, Node.js, C#, Python, and Ruby. Its Runtime API lets you use any other language through a custom runtime.

Opting for Python as the programming language for my function was an easy choice due to its lightweight nature. Additionally, Python's extensive library support, which includes numerous third-party libraries, saves considerable time.

In the first part of this article, I highlighted the available OpenAI endpoints. You could write code by hand to send requests to these endpoints and parse their responses. However, OpenAI conveniently provides a Python library that wraps them. The library is a real time saver for developers, and it let me enjoy some well-earned leisure time instead.
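As a rough illustration of how little code the library requires, the sketch below sends a chat request and extracts the reply text. It assumes the v1.x `openai` package, where a client exposes `client.chat.completions.create(...)`. Older 0.x releases used `openai.ChatCompletion.create` instead. The model name is a placeholder; the article does not say which model the app uses. The client is passed in as a parameter so the function can be tested without network access.

```python
from typing import Any, Dict, List

# Placeholder model name (assumption); use whichever model or fine-tuned model ID you deploy.
DEFAULT_MODEL = "gpt-3.5-turbo"


def ask_assistant(client: Any, messages: List[Dict[str, str]],
                  model: str = DEFAULT_MODEL, max_tokens: int = 300) -> str:
    """Send a chat completion request and return the assistant's reply text.

    `client` is expected to behave like `openai.OpenAI()` from the v1.x library.
    """
    response = client.chat.completions.create(
        model=model,
        messages=messages,
        max_tokens=max_tokens,  # caps output length, and therefore cost, per reply
    )
    # The reply is in the first choice; content can be None, so fall back to "".
    return (response.choices[0].message.content or "").strip()
```

In a deployed function, this would be called roughly as `ask_assistant(OpenAI(api_key=key), messages)` after `from openai import OpenAI`.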

Once my function was finished, I integrated it with the Discord bot on our family server. My goal was to alternate between two distinct models: one with a witty, sarcastic personality and the other with a friendly, accommodating persona. These variations were designed to inject humor and variety into the exchanges, encouraging my children to engage with the bot in an amusing and enjoyable way.
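The article does not say how the two personalities were implemented. One common approach is a different system prompt per persona, and the sketch below assumes exactly that. Two fine-tuned model IDs would work just as well. The prompt texts are hypothetical; only the Badbot and Goodbot names come from the exchange below. `itertools.cycle` provides the strict alternation.

```python
import itertools
from typing import Dict, Iterator, List

# Hypothetical persona prompts; the real ones are not published in the article.
PERSONAS: Dict[str, str] = {
    "Badbot": "You are a witty, sarcastic assistant. Keep answers short and playful.",
    "Goodbot": "You are a friendly, accommodating assistant. Answer accurately and kindly.",
}


def persona_rotation() -> Iterator[str]:
    """Yield persona names in strict alternation: Badbot, Goodbot, Badbot, ..."""
    # Iterating a dict yields its keys in insertion order.
    return itertools.cycle(PERSONAS)


def messages_for(persona: str, question: str) -> List[Dict[str, str]]:
    """Prefix the user's question with the chosen persona's system prompt."""
    return [
        {"role": "system", "content": PERSONAS[persona]},
        {"role": "user", "content": question},
    ]


rotation = persona_rotation()
first = next(rotation)
second = next(rotation)
```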

Question: Hi, is Earth flat?

Badbot: Oh, absolutely! The Earth is as flat as a pancake. In fact, all those pictures of our round planet from space are just elaborate Photoshop creations. And don't even get me started on gravity. It's all just a big conspiracy to keep us from falling off the edge.

Goodbot: No, the Earth is not flat. It is an oblate spheroid, which means it is mostly spherical but slightly flattened at the poles and bulging at the equator. This has been proven through various scientific observations and measurements, including satellite imagery, gravity measurements, and the curvature of the Earth's surface.

Once my Python function was up and running on my local server, I could just transfer it straight to AWS as a Lambda function, right? Well, it wasn't quite that straightforward.

To begin with, my Lambda function needed access to the OpenAI API key. That meant storing the key securely in AWS Secrets Manager and adding code to the Python function to retrieve the key at run time. Secrets Manager comes with a price tag, but the investment is worthwhile given the security risks of the alternative. For instance, about a decade ago, Uber mistakenly exposed AWS access keys on GitHub, which resulted in a substantial data breach. Avoiding such scenarios requires managing sensitive information like API keys securely.
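Retrieving the key is a single `get_secret_value` call on a boto3 Secrets Manager client. The sketch below adds a module-level cache so warm Lambda invocations reuse the key instead of calling Secrets Manager every time. The secret name and the JSON field `OPENAI_API_KEY` are assumptions about how the secret was stored. The function's execution role also needs `secretsmanager:GetSecretValue` permission on that secret.

```python
import json
from typing import Any, Dict

# Hypothetical secret name; use whatever name the key was stored under.
SECRET_ID = "prod/assistant/openai"

# Module-level cache: it survives across warm invocations of the same Lambda container.
_cache: Dict[str, str] = {}


def get_openai_api_key(secrets_client: Any, secret_id: str = SECRET_ID) -> str:
    """Fetch the OpenAI API key from AWS Secrets Manager, caching it per container.

    `secrets_client` is expected to be `boto3.client("secretsmanager")`.
    Assumes the secret is stored as JSON like {"OPENAI_API_KEY": "..."}.
    """
    if secret_id not in _cache:
        response = secrets_client.get_secret_value(SecretId=secret_id)
        _cache[secret_id] = json.loads(response["SecretString"])["OPENAI_API_KEY"]
    return _cache[secret_id]
```

Caching also trims cost, because Secrets Manager bills per API call as well as per stored secret.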

For those working with other platforms, equivalent services exist: on Azure, it's Azure Key Vault, and on Google Cloud, it's Secret Manager.

One crucial yet often confusing aspect of Python functions is the handling of virtual environments. In most cases, developers can package the libraries from their local virtual environment, upload them to AWS Lambda, and expect everything to run smoothly. There are exceptions, though. My function relies on the 'cryptography' library, which contains compiled native code. As a result, the virtual environment created on my Mac workstation was incompatible with the Lambda function's Linux environment, and the function simply wouldn't run. It reminded me of Java's age-old slogan, "Write once, run anywhere." Moments like these make you appreciate the truth in those words.

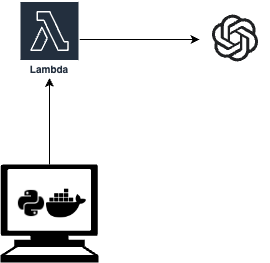

With today's tooling, the solution is surprisingly straightforward: Docker, which embodies the idea "Develop once, run anywhere." I started a Docker container on my local machine from an AWS Lambda container image. I then created the Python virtual environment inside that container instead of on my Mac. Because the libraries were built in the same Linux environment that Lambda uses, they were fully compatible with it. Docker effectively bridged the gap between my local development setup and the Lambda function's operating environment, making deployment smooth and hassle-free.

Connecting to the Internet

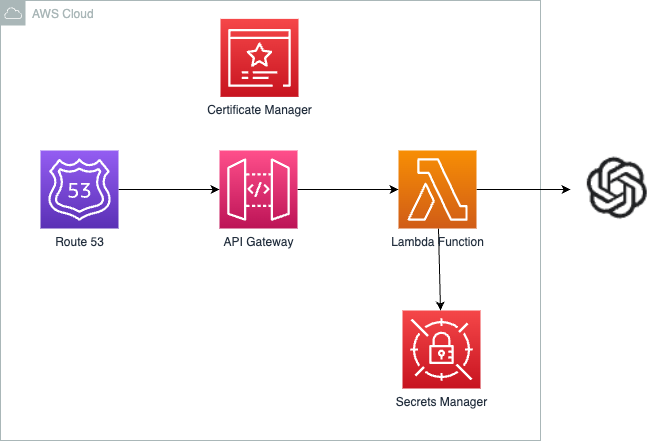

With the Lambda function ready, the next step is to make it reachable over the internet so the mobile app can call it. To achieve this, I used three AWS services: Route 53 for domain management, API Gateway to handle incoming API calls, and AWS Certificate Manager to ensure secure, encrypted communication.

API Gateway: API Gateway is a service that allows developers to create, publish, maintain, monitor, and secure APIs at any scale. It acts as a front door for applications to access the Lambda function. It handles all the tasks of accepting and processing concurrent API calls, including traffic management, CORS support, authorization, and access control. This is equivalent to Azure API Management in the Microsoft ecosystem and Google Cloud Endpoints in Google Cloud.
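With API Gateway's Lambda proxy integration, the HTTP request arrives in the handler's `event`, with the raw body in `event["body"]`. The handler must return a dictionary with `statusCode`, `headers`, and a string `body`. The sketch below shows that contract, including a CORS header. It makes several assumptions: the JSON field name `question`, the allowed origin, and a plain-text (not base64-encoded) body. The `answer` callable stands in for the code that calls the OpenAI API.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical origin; restrict CORS to the clients you actually serve.
ALLOWED_ORIGIN = "https://example.com"


def _response(status: int, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Shape a reply in the format API Gateway's Lambda proxy integration expects."""
    return {
        "statusCode": status,
        "headers": {
            "Content-Type": "application/json",
            "Access-Control-Allow-Origin": ALLOWED_ORIGIN,
        },
        "body": json.dumps(payload),
    }


def make_handler(answer: Callable[[str], str]) -> Callable[[Dict[str, Any], Any], Dict[str, Any]]:
    """Build a Lambda handler around `answer`, which turns a question into a reply."""
    def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        # Assumes a plain-text JSON body (isBase64Encoded is false).
        try:
            body = json.loads(event.get("body") or "{}")
        except json.JSONDecodeError:
            return _response(400, {"error": "Body must be valid JSON"})
        question = body.get("question") if isinstance(body, dict) else None
        if not isinstance(question, str) or not question.strip():
            return _response(400, {"error": "Missing 'question'"})
        return _response(200, {"answer": answer(question)})
    return handler
```

In the deployed function, `answer` would wrap the OpenAI call, and the module would expose something like `lambda_handler = make_handler(...)` as the configured handler.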

Certificate Manager: I used AWS Certificate Manager to manage my API's SSL/TLS certificates. These certificates secure the traffic between the client application and API Gateway. Certificate Manager simplifies certificate management by delegating renewals to AWS. In the Microsoft ecosystem, Azure Key Vault offers secure cloud storage for certificates. Google Cloud provides Google Cloud Certificate Authority Service for managing and deploying private certificate authorities.

Route 53: Finally, I made my Lambda function accessible through an easy-to-remember URL. Amazon Route 53 is a scalable Domain Name System (DNS) web service that routes user requests to infrastructure running on AWS. Using Route 53, I associated my domain name with my API Gateway endpoint. In Microsoft Azure, the counterpart is Azure DNS, and Google Cloud offers Google Cloud DNS.
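Associating a domain with an API Gateway custom domain is usually done with a Route 53 alias `A` record. The sketch below builds the `ChangeBatch` structure that boto3's `route53.change_resource_record_sets` accepts. The record name, target DNS name, and hosted zone IDs are placeholders. The target values come from the regional domain name and hosted zone ID that API Gateway reports for your custom domain.

```python
from typing import Any, Dict


def alias_change_batch(record_name: str, target_dns_name: str,
                       target_hosted_zone_id: str) -> Dict[str, Any]:
    """Build a Route 53 ChangeBatch pointing `record_name` at an API Gateway domain."""
    return {
        "Comment": f"Point {record_name} at API Gateway",
        "Changes": [{
            "Action": "UPSERT",  # create the record, or update it if it already exists
            "ResourceRecordSet": {
                "Name": record_name,
                "Type": "A",
                "AliasTarget": {
                    "HostedZoneId": target_hosted_zone_id,  # API Gateway's zone, not yours
                    "DNSName": target_dns_name,
                    "EvaluateTargetHealth": False,
                },
            },
        }],
    }


# Placeholder values for illustration.
batch = alias_change_batch(
    record_name="assistant.example.com",
    target_dns_name="d-example123.execute-api.us-east-1.amazonaws.com",
    target_hosted_zone_id="ZEXAMPLEAPIGW",
)
# Applying it (needs AWS credentials):
#   boto3.client("route53").change_resource_record_sets(
#       HostedZoneId="<your hosted zone ID>", ChangeBatch=batch)
```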

By combining these three services - API Gateway, Certificate Manager, and Route 53 - I exposed my Lambda function to the internet, enabling it to communicate with my app.

Authentication

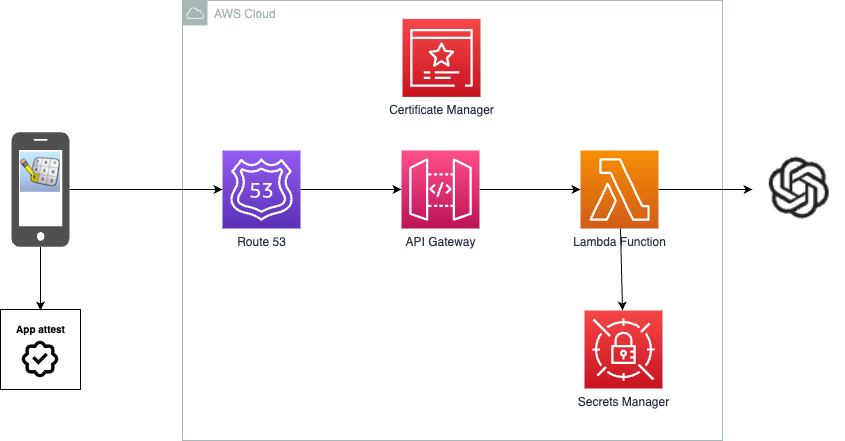

Authentication plays a vital role in the security and integrity of any application. It's not enough to provide customer services; those services must be delivered within a secure, protected framework. Authentication acts as the principal barrier, validating user identities and allowing only confirmed customers to access the app's services. This crucial security measure prevents unauthorized access and shields the application from abuse by unverified internet traffic or malicious actors.

In addition to 'traditional' authentication and authorization protocols, I took an extra precaution to restrict the service to users of my application. To achieve this, I used Apple's DeviceCheck service to assert app integrity.

DeviceCheck allows iOS developers to store two bits of data per device. When a user asks the assistant a question, my app attaches a token to the request. This token is generated by the DeviceCheck API and identifies the device anonymously. It contains no user-identifiable information, so user privacy is maintained.
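On the server side, a DeviceCheck token is checked by POSTing it to Apple's `validate_device_token` endpoint. The request body carries the token, a unique transaction ID, and a millisecond timestamp, and the request is authorized with a bearer JWT. The sketch below builds and sends that request using only the standard library. It assumes the ES256-signed JWT (header `kid` = key ID, claims `iss` = team ID and `iat` = issue time) is created elsewhere, for example with PyJWT and the 'cryptography' library. It also treats any non-200 status as a rejected token.

```python
import json
import time
import urllib.error
import urllib.request
import uuid

PRODUCTION_URL = "https://api.devicecheck.apple.com/v1/validate_device_token"
DEVELOPMENT_URL = "https://api.development.devicecheck.apple.com/v1/validate_device_token"


def build_validate_request(device_token: str, auth_jwt: str,
                           development: bool = False) -> urllib.request.Request:
    """Build (but do not send) a DeviceCheck validate_device_token request.

    `auth_jwt` must be an ES256-signed JWT made from your DeviceCheck private key;
    creating it is not shown here.
    """
    body = {
        "device_token": device_token,          # base64 token sent by the app
        "transaction_id": str(uuid.uuid4()),   # unique per request
        "timestamp": int(time.time() * 1000),  # milliseconds since the epoch
    }
    return urllib.request.Request(
        DEVELOPMENT_URL if development else PRODUCTION_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {auth_jwt}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def is_device_token_valid(request: urllib.request.Request, timeout: float = 5.0) -> bool:
    """Send the request; HTTP 200 means Apple accepted the token."""
    try:
        with urllib.request.urlopen(request, timeout=timeout) as resp:
            return resp.status == 200
    except urllib.error.HTTPError:
        # Apple answers invalid tokens or bad credentials with an error status.
        return False
```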

Incorporating this level of security required code on both the app side and the Lambda function side. The mobile app generates the unique token and attaches it to its requests. The Lambda function is responsible for issuing the challenge to the app and validating the token received with each request. While this may seem like extra work, it was well worth the effort. This additional layer of security ensures that only authentic instances of my app, installed on genuine devices, can access the service, safeguarding against misuse and abuse.
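Server-issued challenges are part of Apple's App Attest flow, which ships in the same DeviceCheck framework. The server hands out a random, one-time value, and the app binds it into the data it asks Apple to sign. The sketch below covers only the server's challenge bookkeeping: issue a random value, accept it once, and reject it after it expires or is replayed. Verifying the attestation or assertion itself is out of scope. The class name and the five-minute lifetime are hypothetical. The in-memory store is also an assumption, because separate Lambda containers don't share memory, so a real deployment would need shared storage such as a database table.

```python
import secrets
import time
from typing import Callable, Dict


class ChallengeStore:
    """Issue single-use, short-lived random challenges and check them on return."""

    def __init__(self, ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested deterministically
        self._issued: Dict[str, float] = {}  # challenge -> expiry time

    def issue(self) -> str:
        """Create a fresh challenge from 32 random bytes, URL-safe base64 encoded."""
        challenge = secrets.token_urlsafe(32)
        self._issued[challenge] = self._clock() + self._ttl
        return challenge

    def consume(self, challenge: str) -> bool:
        """Return True once for an unexpired challenge; replays and unknown values fail."""
        # pop() removes the challenge, so a second use of the same value is rejected.
        expiry = self._issued.pop(challenge, None)
        return expiry is not None and self._clock() <= expiry
```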

Conclusion

Integrating a customer support assistant into my app was an exciting journey. By combining OpenAI's GPT models with serverless computing on AWS Lambda, I provided personalized, accurate assistance to app users while keeping costs low. The process involved building a Lambda function in Python, keeping the OpenAI API key in AWS Secrets Manager, and exposing the function securely through API Gateway, Certificate Manager, and Route 53. Additionally, implementing authentication measures such as Apple's DeviceCheck service added an extra layer of security, ensuring that only authenticated app instances can access the service. With these steps in place, I created a cost-effective way to integrate an intelligent customer support assistant into my mobile app, one that can greatly enhance the user experience.