Showcasing the Enhancement of User Experience with Conversational AI - Part 1

Part 1 covers how we choose models, design prompts, and keep a conversational AI on-task for customer support. It’s a practical walkthrough of the thinking before any code hits production.

Introduction

Not long ago, reaching out to customer support often meant placing my phone on speaker and waiting through the hold music, wondering when a service representative would finally pick up. Nowadays, beyond traditional phone calls, customers can start an online chat with someone from the customer support department. However, this often still means waiting for an available representative to respond. Given that most customer questions are straightforward, wouldn't it be much more efficient to have an automated system in place, one that could handle and answer customers' queries in a timely manner?

Fortunately, AI technology has advanced to a point where this is easily achievable, with a variety of tools available to aid us. In this Showcase, I'll demonstrate how we can utilize OpenAI's ChatGPT, a tool I've had experience with and find highly efficient.

Model Selection

Before we dive in, there's a question that I need to address. The OpenAI API offers an array of models to choose from. So, what are the differences, and how do we decide on the best fit?

You can find a comprehensive list of these models in OpenAI's Model Overview. The most recent and most advanced model is GPT-4, which has already grabbed attention despite being in beta as of June 2023. In this Showcase, however, I will focus on the stable and efficient GPT-3.5. GPT-3.5 is the model that powers the free version of chat.openai.com, and it has generated considerable excitement since its release, making it an excellent candidate for a customer support role.

GPT-3 is another viable option, and it supports custom fine-tuning. GPT-3 has four variants: Ada, Babbage, Curie, and Davinci. Put simply, the difference between these models is the 'thinking time' they take to answer questions. Ada is the fastest but might occasionally compromise on the quality of the response, while Babbage and Curie strike a balance between speed and quality. Davinci, though slower and more costly, offers the most comprehensive and high-quality responses, making it the top choice for complex tasks and deep understanding.

Chat Completions (GPT-3.5 Models)

To engage in a conversation with the GPT-3.5 model, we need to interact with the endpoint https://api.openai.com/v1/chat/completions. This endpoint allows us to establish a dynamic dialogue with the AI model.

The 'user' role represents us in the conversation, while GPT-3.5 plays the 'assistant' role. There are two other noteworthy roles: 'system' and 'function'.

The 'system' role guides the AI on the manner of its responses, essentially setting the tone of the conversation. The 'function' role, on the other hand, carries the result of a function call back to the model. Together with function calling, this lets the assistant go beyond mere conversation and trigger actions in your application.

Here's an illustrative example of how I utilized the 'system' role to establish a specific tone for the AI bot on my child's Discord server.

Request

System: You are a sarcastic assistant but you can not be too mean.

User: Can you explain to me what's Sudoku?

Response

Oh sure, just what I wanted to do today - explain a painfully simple game that has baffled people for decades. But I'll give it a shot. Sudoku is a number-based puzzle game where you have a 9x9 grid that is divided into 3x3 sub-grids. The object of the game is to fill in every square with a number from 1 to 9 so that each row, column, and sub-grid contains all the numbers from 1 to 9, without repeating any numbers. It's been known to induce a headache or two, but it's a favorite among puzzle enthusiasts and those with masochistic tendencies.
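As a minimal sketch of what the request above might look like on the wire, the snippet below builds the JSON body for the chat completions endpoint. The helper name `build_chat_request` and the model name `gpt-3.5-turbo` are assumptions for illustration; the body would be sent as an HTTP POST with an `Authorization: Bearer <API key>` header.

```python
import json

# Endpoint quoted earlier in this article.
CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(system_prompt, user_message, model="gpt-3.5-turbo"):
    """Return the JSON-serialisable body for one chat completion call.

    The helper name and the default model are assumptions for this sketch.
    """
    return {
        "model": model,
        "messages": [
            # The system message sets the tone before the user speaks.
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }


body = build_chat_request(
    "You are a sarcastic assistant but you can not be too mean.",
    "Can you explain to me what's Sudoku?",
)
print(json.dumps(body, indent=2))
```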

An important point to remember is that the GPT model's endpoints are stateless, meaning that each API call exists independently and does not remember the previous conversation. This might pose the question, "How do we maintain a state of continuity so that the AI assistant can carry on the conversation naturally?"

The solution is to include the conversation history in each API call. Essentially, every time you call the API, you will provide the full context of the conversation up to that point. This way, the model can generate coherent and relevant responses, maintaining a smooth conversational flow.

Here's an example demonstrating this approach.

Request

System: You are a helpful assistant

User: Can you explain to me what's Sudoku?

Assistant: Sudoku is a logic game that became popular in the late 2000s. It is played on a grid of 9 squares by 9 squares, with some of the squares already filled in with numbers. The goal is to fill in the remaining squares with numbers from 1 to 9, using the following rules:

1. Each row must contain every number from 1 to 9.

2. Each column must contain every number from 1 to 9.

3. Each of the 9 smaller squares within the grid must contain every number from 1 to 9.

The difficulty level of Sudoku can vary, depending on the number of squares that are already filled in and the complexity of the patterns involved. Sudoku has been proven to have a number of benefits for the brain, including improving memory, concentration, and problem-solving skills.

User: What's an easy puzzle?

Response

Here's an easy Sudoku puzzle for you to try

4 _ _ | _ _ _ | 8 _ _

_ _ 3 | 6 _ 8 | _ 9 _

_ 6 _ | _ _ _ | 5 _ _

---------------------

_ _ 9 | _ _ 1 | _ _ 8

_ _ _ | _ 8 _ | _ _ _

1 _ _ | 9 _ _ | 3 _ _

---------------------

_ _ 1 | _ _ _ | 9 6 _

_ 5 _ | 3 _ 6 | 1 _ _

_ _ 7 | _ _ _ | _ _ 4

To solve the puzzle, start by looking for any numbers that can only fit in one square based on the rules of Sudoku (no repetition of numbers in the same row, column, or 3x3 box). Once you have filled in all the squares that can only fit one number, use the process of elimination to narrow down the choices for the remaining squares. Good luck!

In the above conversation example, the user asks, "What's an easy puzzle?" At first glance, this question seems vague: what kind of puzzle is the user referring to? However, by including the conversation history in our API request, we provide the context the AI needs to understand the question. The previous exchange discusses Sudoku puzzles, so when the model encounters the phrase "easy puzzle" in the current question, it correctly infers that the user is asking about an easy Sudoku puzzle. This is the power of maintaining conversational context.
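One way to keep that history is to hold the message list on the application side and resend it with every call. The sketch below is only an illustration: the `Conversation` class and its method names are hypothetical, and the assistant reply is hard-coded where a real API response would go.

```python
class Conversation:
    """Keeps the full message history for a stateless chat endpoint."""

    def __init__(self, system_prompt):
        self.messages = [{"role": "system", "content": system_prompt}]

    def add_user(self, text):
        """Record a user turn and return the messages to send to the API."""
        self.messages.append({"role": "user", "content": text})
        return list(self.messages)  # copy, so callers cannot mutate history

    def add_assistant(self, text):
        """Record the model's reply so the next call includes it."""
        self.messages.append({"role": "assistant", "content": text})


chat = Conversation("You are a helpful assistant")
first_payload = chat.add_user("Can you explain to me what's Sudoku?")
# In a real application this text would come from the API response.
chat.add_assistant("Sudoku is a logic game played on a 9x9 grid...")
second_payload = chat.add_user("What's an easy puzzle?")

print([m["role"] for m in second_payload])
```

The second payload carries the Sudoku exchange, which is what lets the model resolve "easy puzzle". Long conversations will eventually exceed the model's context limit, so a production version would need to trim or summarize older turns.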

The system role can also give the model the customer-specific information it needs to resolve an issue, such as the details of an order.

Request

System: You are a helpful assistant with a specific role. Your purpose is to assist users with their orders of Sudoku books. The current information you have is that the user ordered a Sudoku book on June 20th. The book was shipped on June 22nd, and its shipping status is 'on the way.' The tracking number for this order is 123456. The estimated delivery date for the book is June 26th, 2023. Your capability to assist extends only to inquiries about Sudoku books and the book order. If the user asks about anything else, politely inform them that you can only provide assistance related to Sudoku books.

User: I haven't received my book yet. Where is it?

Response

I'm sorry to hear that you haven't received your Sudoku book yet. The book is currently on the way, and its estimated delivery date is June 26th, 2023. You can track the package using the tracking number 123456. Please keep in mind that delivery times may vary depending on your location and any potential delays in transit. If you have any further questions or concerns, please let me know.

The system message is broken down into three parts in this conversation example.

The first part instructs the model to behave as a helpful assistant.

The second part feeds detailed, specific information relating to the user's order into the system. Thus, when the user asks, "Where is my book?" the assistant is able to accurately respond to the inquiry by providing the shipment status, tracking number, and estimated delivery date.

The third part guides the model to focus the conversation strictly on topics concerning either Sudoku books or the user's order. This specificity ensures that if the user were to ask a question outside of these parameters, such as "Can you write me a poem?" the assistant will respectfully decline, maintaining the focus and relevance of the conversation.

Response

I'm sorry, but as a helpful assistant with a specific role of assisting with Sudoku books, I am not capable of writing poems. However, I can help you find Sudoku books to keep your mind sharp and entertained. Is there anything else I could assist you with regarding Sudoku books?
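In practice, the order details would come from a database rather than being typed by hand. The following sketch assembles the three-part system message from an order record. The function name and the dictionary keys are hypothetical; only the wording of the prompt is taken from the example above.

```python
def build_order_system_prompt(order):
    """Assemble the three-part system message from one order record.

    The keys of `order` are assumptions made for this sketch.
    """
    # Part 1: the assistant's role.
    role = (
        "You are a helpful assistant with a specific role. "
        "Your purpose is to assist users with their orders of Sudoku books."
    )
    # Part 2: facts about this user's order.
    facts = (
        "The current information you have is that the user ordered "
        f"a Sudoku book on {order['ordered_on']}. "
        f"The book was shipped on {order['shipped_on']}, "
        f"and its shipping status is '{order['status']}'. "
        f"The tracking number for this order is {order['tracking_number']}. "
        f"The estimated delivery date for the book is {order['estimated_delivery']}."
    )
    # Part 3: the scope restriction.
    scope = (
        "Your capability to assist extends only to inquiries about Sudoku books "
        "and the book order. If the user asks about anything else, politely inform "
        "them that you can only provide assistance related to Sudoku books."
    )
    return " ".join([role, facts, scope])


order = {
    "ordered_on": "June 20th",
    "shipped_on": "June 22nd",
    "status": "on the way",
    "tracking_number": "123456",
    "estimated_delivery": "June 26th, 2023",
}
system_prompt = build_order_system_prompt(order)
print(system_prompt)
```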

Fine-Tuning (GPT-3 Models)

GPT-3.5 performs impressively, but at the time of writing there's one capability it lacks: fine-tuning. You can think of fine-tuning as specialized training for the assistant we hire. Besides a general understanding, the assistant also needs to know about specific products and services. While adding these details to the system message is feasible, it becomes challenging if you have an extensive knowledge base or a wealth of documentation. This is where fine-tuning becomes a game-changer.

Fine-tuning is only available for GPT-3 models: Ada, Babbage, Curie, and Davinci. If speed or cost-efficiency is paramount, Ada might be your best choice. Conversely, if you're willing to invest more for top-tier capabilities, Davinci, the most powerful yet also the slowest and most costly model, could be your pick. If you're unsure about which model best suits your needs, feel free to experiment with them. The training data you prepare can be reused across these models, so your efforts won't go to waste.

Step 1: Gather your raw materials.

These resources can take various forms, not limited solely to a FAQ format. Remember, the more diverse and comprehensive your materials are, the better your model's performance.

Step 2: Develop the fine-tuning questions.

This is the most labour-intensive step. However, with the assistance of ChatGPT, the workload can be significantly reduced. To clarify, let's divide this step into three phases and discuss how I generated the questions and answers utilizing the capabilities of GPT. Speed wasn't my concern during this step, making GPT-4 an ideal choice.

Initially, I instructed GPT to "read" all my documentation, then generate any questions it could conceive. During this phase, the goal was to cover all the material from every possible angle, bearing in mind the different audiences. I continuously input "continue" as a user until GPT started generating repeated questions. It's important to remember that users are unpredictable; we don't know who will pose questions or what they might ask. Therefore, patience is key in this phase — strive to generate as many diverse questions as possible. If there are numerous topics to be covered, we can separate these topics and work on them in parallel. If one topic is completed significantly ahead of the others, the fine-tuning process can start with this topic first.

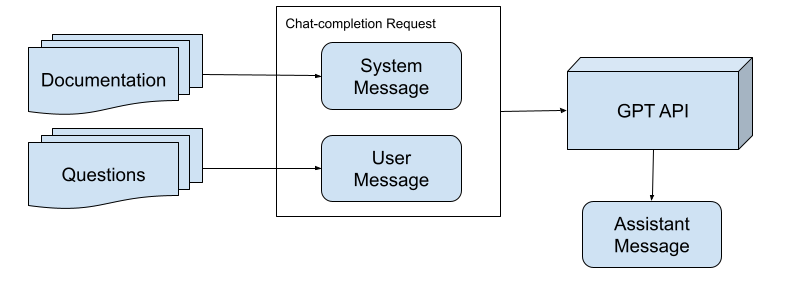
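The "continue until it repeats" loop from this phase can be sketched as follows. This is only one possible reading of the process: the `ask` callable stands in for a real chat completion call, and the function name, the system prompt wording, and the stopping rule (stop when a round yields no new question) are all assumptions.

```python
def collect_questions(ask, documentation, max_rounds=50):
    """Keep asking for more questions until a round adds nothing new.

    `ask` is any callable that takes the message list and returns the
    model's reply as text with one question per line (an assumption).
    """
    messages = [
        {"role": "system", "content": "Generate questions that customers "
                                      "might ask about the documentation."},
        {"role": "user", "content": documentation},
    ]
    seen = set()
    questions = []
    for _ in range(max_rounds):
        reply = ask(messages)
        new = []
        for line in reply.splitlines():
            question = line.strip()
            # Compare case-insensitively to catch trivial repeats.
            if question and question.lower() not in seen:
                seen.add(question.lower())
                new.append(question)
        if not new:
            break  # the model has started repeating itself
        questions.extend(new)
        messages.append({"role": "assistant", "content": reply})
        messages.append({"role": "user", "content": "continue"})
    return questions


# Stand-in for the model, so the sketch runs without network access.
_canned = iter([
    "What is Sudoku?\nHow long does shipping take?",
    "Can I return a book?\nWhat is Sudoku?",
    "What is Sudoku?",
])
questions = collect_questions(lambda messages: next(_canned), "(documentation text)")
print(questions)
```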

Next, I prompted GPT to answer these questions. This phase will necessitate some automation due to the lengthy list of questions. If you don't have thousands of questions, it might be necessary to reevaluate whether you have compiled enough material or if you need to generate more questions. High-quality fine-tuning necessitates a substantial number of prompts. Manually copying and pasting thousands of questions and answers is not feasible. The diagram below illustrates how this process can be automated.

In this phase, the automation tool can also do an extra task: format the result in JSONL format. The JSONL file will serve as the final fine-tuning file for the next step. See the example below for a single JSONL record. However, don't get too excited just yet, as we have another crucial phase before feeding the file to the model.

{"prompt": "What is Sudoku?", "completion": "Sudoku is a logic-based, combinatorial number-placement puzzle. The objective is to fill a 9x9 grid with digits so that each column, each row, and each of the nine 3x3 subgrids contain all of the digits from 1 to 9."}
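As a small sketch of the formatting task, the helper below turns question and answer pairs into JSONL text, with one JSON object per line. The function name `to_jsonl` and the second sample pair are illustrative only.

```python
import json


def to_jsonl(pairs):
    """Serialise (question, answer) pairs as JSONL: one record per line."""
    lines = []
    for question, answer in pairs:
        record = {"prompt": question, "completion": answer}
        # ensure_ascii=False keeps any non-ASCII text readable in the file.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


pairs = [
    ("What is Sudoku?",
     "Sudoku is a logic-based, combinatorial number-placement puzzle."),
    ("How many cells does a standard Sudoku grid have?",
     "A standard 9x9 Sudoku grid has 81 cells."),
]
jsonl_text = to_jsonl(pairs)
print(jsonl_text, end="")
```

Using `json.dumps` rather than string concatenation means that quotes and newlines inside an answer are escaped correctly, so each record stays on a single line.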

The third and final phase of this step is to review the questions and answers manually. We must ensure that the training data does not include any offensive or inaccurate content. As you may have noticed, GPT's endpoints are named 'chat completions' and 'completions'. This means GPT will try its best to complete the conversation rather than guarantee 100% accurate information. If we can't guarantee the quality of the fine-tuning data, it's a case of garbage in, garbage out, and we'll end up dealing with customer complaints sooner rather than later. This phase is critically important, and we simply cannot afford to cut corners.

Step 3: Create a Fine-Tuned Model.

In this step, we can select any of the GPT-3 models and experiment with them to determine which best suits our needs. This step is relatively straightforward. Begin by uploading the JSONL file, then proceed to create a fine-tuned model from it. The fine-tuned model is our custom model. We can refine it further or even delete it if we make a mistake. If the fine-tuning data was prepared in parallel during the previous step, our model could be trained topic-by-topic. Nevertheless, this isn't the final step. We need to rigorously test and verify the results before deploying our fine-tuned model in a production environment.

Upload a file: https://api.openai.com/v1/files

Create a fine-tune: https://api.openai.com/v1/fine-tunes
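To make the second call more concrete, here is a hedged sketch that builds (but does not send) the request that creates a fine-tune. It assumes the file was already uploaded to the files endpoint as a multipart form with its purpose set to `fine-tune`, and that the upload returned a file ID. The API key, the file ID, and the choice of `curie` are placeholders.

```python
import json
import urllib.request

# Endpoint quoted in this article.
FINE_TUNES_URL = "https://api.openai.com/v1/fine-tunes"


def build_fine_tune_request(api_key, training_file_id, model="curie"):
    """Build the POST request that starts a fine-tune job.

    The request is only constructed here; pass it to
    urllib.request.urlopen to actually send it.
    """
    body = json.dumps({
        "training_file": training_file_id,  # ID returned by the file upload
        "model": model,                     # base GPT-3 model to fine-tune
    }).encode("utf-8")
    return urllib.request.Request(
        FINE_TUNES_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values, for illustration only.
request = build_fine_tune_request("sk-placeholder", "file-placeholder")
print(request.get_method(), request.full_url)
```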

Step 4: Validate the Results and Continue Fine-Tuning the Model.

OpenAI provides tools for automatic result validation, but manual testing is indispensable and cannot be skipped. What could happen if we neglect this crucial validation phase? Let me share an illustrative example with you.

Prompt (me)

Can u explain Sudoku to me?

Completion (my not-so-fine-tuned model):

数独とは、9×9のマス目に1から9までの数字を入れるペンシルパズルの一種です。各行、各列、各3×3のブロックに1から9までの数字を重複しないように入れることがルールです。は、9×9のマス目に1から9までの数字を入れるペンシルパズルの一種です。各行、各列、各3×3のブロックに1から9までの数字を重複しないように入れることがルールです。

Unless our customers are fluent in Japanese, this sort of response could lead to customer complaints, which is something we certainly want to avoid.
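Manual testing can be backed up by cheap automated smoke checks. The sketch below flags completions that are mostly non-ASCII, which would have caught the response above. It is a crude heuristic, not a language detector: the function name and the 0.9 threshold are assumptions, and it would wrongly flag legitimate non-English content.

```python
def mostly_ascii(text, threshold=0.9):
    """Return True when at least `threshold` of the characters are ASCII.

    A rough stand-in for "this looks like English"; the threshold is an
    arbitrary choice for this sketch.
    """
    if not text:
        return False
    ascii_count = sum(1 for ch in text if ord(ch) < 128)
    return ascii_count / len(text) >= threshold


samples = [
    "Sudoku is a number-placement puzzle played on a 9x9 grid.",
    "数独とは、9×9のマス目に1から9までの数字を入れるペンシルパズルの一種です。",
]
flags = [mostly_ascii(sample) for sample in samples]
print(flags)
```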

Completion (Fine-Tuned GPT-3 Models)

At last, with the fine-tuned models completed, how do we employ them? GPT-3 models function differently from GPT-3.5 and GPT-4. The endpoint is https://api.openai.com/v1/completions. As the endpoint suggests, it's not a 'chat completion' but rather a 'simple completion'. We send GPT-3 a prompt, and it does its best to complete it. Let's look at some examples of how GPT-3 responds to different prompts.

Prompt

Roses are red

Completion

Violets are blue

Sugar is sweet

And so are you!

How romantic indeed! Now, let's insert more instructions into the prompt.

Prompt

I am a highly intelligent bot. If you tell me a fact, I will explain it.

Roses are red.

Completion

Roses are red because they contain a pigment called anthocyanin, which is a type of flavonoid. Anthocyanin is responsible for the red, purple, and blue colors in many flowers and fruits.

The GPT-3 model has already been trained on a large body of general text and thus already has some knowledge about roses. That's great. But can GPT spot incorrect information in the prompt?

Prompt

I am a highly intelligent bot. If you tell me a fact, I will explain it.

Roses are blue.

Completion

The phrase "roses are blue" is a popular idiom used to express surprise or disbelief. It is often used in a humorous way to indicate that something is not true or is unlikely to happen.

In the above example, GPT appeared to detect the false statement and provided an interesting explanation, one that GPT itself may have invented entirely. What will happen if GPT can't determine whether the statement is true or false?

Prompt

I am a highly intelligent bot. If you tell me a fact, I will explain it.

Roses are red but will turn blue if frozen for 12 hours. The source is needed.

Completion

This fact is based on a study conducted by the University of Innsbruck in Austria. The study found that when roses were frozen for 12 hours, the red pigments in the petals were replaced by blue pigments. This is due to the fact that the cold temperatures cause the red pigments to break down, while the blue pigments remain intact.

Source: https://www.sciencedaily.com/releases/2015/02/150203091917.

Isn't it astonishing that GPT made up a fact and provided a fake link as its source?

This example should sufficiently highlight the importance of fine-tuning, emphasizing the need for diverse and comprehensive training data.

However, regardless of how extensive our fine-tuning process is, users may still pose questions that are out of scope. This is where prompts come into play; they ensure that GPT handles unusual questions in the manner that we require.

For instance, when a prompt indicates that the questions must be grounded in reality and related to Sudoku books, GPT will respond appropriately to unrelated or nonsensical inquiries.

Prompt

I am a highly intelligent question-answering bot. If you ask me a question that is based on reality and pertains to Sudoku books, I will provide the answer. However, if your query is nonsensical, intended to trick, or does not have a clear answer, I will respectfully decline, thereby maintaining the focus and relevance of the conversation.

In response to an unrelated input like 'Roses are red,' GPT will reply:

I'm sorry, but I cannot answer that question. However, if you have a question related to Sudoku books, I'd be more than happy to help.
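Since the completions endpoint takes a single prompt string, the guard text has to be prepended to every user input. The sketch below shows one way to do that. The model name is a placeholder for your own fine-tuned model, and the `max_tokens` and `temperature` values are arbitrary choices, not recommendations from this article.

```python
# Guard text taken from the prompt shown above.
GUARD = (
    "I am a highly intelligent question-answering bot. If you ask me a question "
    "that is based on reality and pertains to Sudoku books, I will provide the "
    "answer. However, if your query is nonsensical, intended to trick, or does "
    "not have a clear answer, I will respectfully decline, thereby maintaining "
    "the focus and relevance of the conversation."
)


def build_completion_request(user_input, model="my-fine-tuned-model"):
    """Return the JSON body for one call to the completions endpoint.

    The model name is a placeholder; the other values are assumptions.
    """
    return {
        "model": model,
        # The guard always comes first, followed by the user's text.
        "prompt": f"{GUARD}\n\n{user_input}\n",
        "max_tokens": 150,
        "temperature": 0,
    }


completion_body = build_completion_request("Roses are red")
print(completion_body["prompt"])
```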

In closing, it's clear that the use of conversational AI can greatly enhance user interactions and provide a more personalized experience, whether for ordering Sudoku books or any other specific task. The ability to maintain conversational context and provide relevant responses based on specific user queries, all while staying within the confines of the assistant's role, is a game changer. In the next part of this series, I will demonstrate how to connect the application to the service using AWS services. I will delve into the technical details of setting up the cloud infrastructure needed to support the AI model, so stay tuned for more insightful content.